Setup an Athena Data Lake

# Setup an Athena Data Lake in Two Minutes

In this tutorial, we will show you how to setup an Athena Data Lake. The basic concept is that you have JSON SerDe or Parquet data files in an S3 bucket, and that you can then query them using a SQL.

# Setup your data

The first step is to create an S3 Bucket. You can name this whatever you want. In the example below, it is called commandeer-dev-analytics. The data for page views is stored as JSON files. Secondly, add some test data to it. Note that the folder names are the partitions.

/* file structure */

page-view/partitiondate=YYYY-MM-DD/userid=USER_GUID

PAGE_VIEW_GUID.JSON

/* sample data */

{

"id": "260b3231-dafb-4c58-8945-7cb90178d870",

"name": "dashboard",

"userId": "3c19c5e6-f1bd-4e5c-92f3-a035e64d0acc",

"createdAt": "2020-08-06T02:34:04.436Z"

}

.png)

Page view S3 data lake

# Create the Athena table

You can create Athena tables by hand or by using the ETL Glue Crawler on AWS. It looks very similar to a standard SQL table, but notice two important distinctions The first is that the indexes are in the PARTITIONED_BY section, which correlates to your folder structure. The second thing is the LOCATION which specifies where this data is pulled from.

CREATE EXTERNAL TABLE `page_view`(

`id` string COMMENT 'from deserializer',

`name` string COMMENT 'from deserializer',

`userid` string COMMENT 'from deserializer',

`createdat` string COMMENT 'from deserializer',

`params` string COMMENT 'from deserializer',

`leadid` string COMMENT 'from deserializer')

PARTITIONED BY (

`partition_date` string,

`user_id` string)

ROW FORMAT SERDE

'org.openx.data.jsonserde.JsonSerDe'

WITH SERDEPROPERTIES (

'paths'='createdAt,id,leadId,name,params,userId')

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

's3://commandeer-dev-analytics/page-view/'

TBLPROPERTIES (

'CrawlerSchemaDeserializerVersion'='1.0',

'CrawlerSchemaSerializerVersion'='1.0',

'UPDATED_BY_CRAWLER'='commandeer-analytics-crawler',

'averageRecordSize'='241',

'classification'='json',

'compressionType'='none',

'objectCount'='157',

'recordCount'='157',

'sizeKey'='42375',

'typeOfData'='file')

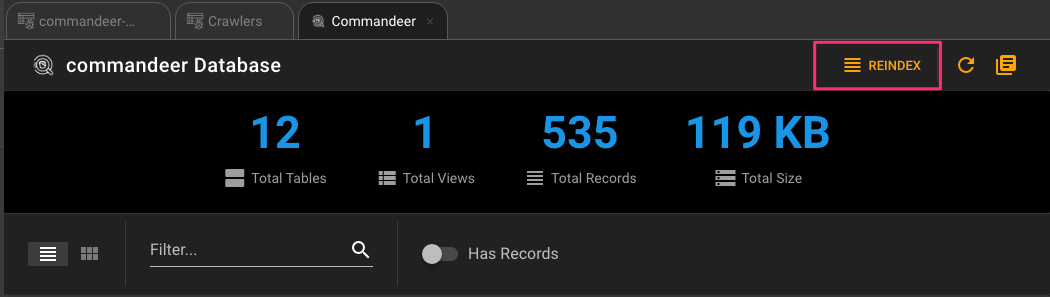

# Index your Data

This is a crucial step in setting up Athena. The indexes are based on the folder paths for each file, and indexing needs to occur in order for your SQL to find the data. You can run this from inside Commandeer on the database page. For our analytics system, we have a lambda that runs nightly on CloudWatch Rules CRON that reindexing our data.

Reindex an Athena Database in Commandeer

# Run your Queries

You should now be all setup. To run your queries in Commandeer, you simply need to select the bucket where the output gets written to. In our case it is the commandeer-dev-athena bucket. Every query you run, and it's accompanying results get put here. Below you can see a simple query to select all page_views on August 6th, 2020.

.png)

# Conclusion

Creating a data lake on AWS has never been easier. Remember, how you structure your data is the most important part. Because you only pay for the read operations ono S3, you want to make sure that you query your data using partitions. If you try to query a really large 'table' of data that has to scan all partitions, you will have to pay more than querying just based on say the partition_date. During our nightly batch processing, we summarize data for the previous day only, so that we only incur the same minimal cost day in and day out.